
Artificial intelligence (AI)-powered writing tools are becoming increasingly popular among researchers. AI tools can improve several important aspects of writing, such as readability, grammar, spelling, and tone, giving authors a competitive edge when drafting grant proposals and academic articles. The use of "generative AI", which can produce text that reads as if a human wrote it, has also grown in recent years. However, despite AI's enormous potential in academic writing, its use carries several significant pitfalls.

Inauthentic Sources

AI tools are built on rapidly evolving deep learning models that generate answers to your queries, or "prompts". Owing to advances in computing power and the rapid growth in the amount of data available to these models, their answers are often accurate. However, AI can make mistakes and give you inaccurate information, including references to sources that do not exist. What is worrying is that such output can look authentic at first glance, which increases the risk that it will be incorporated into research articles. Failing to scrutinise the information and sources provided by AI can therefore impair scientific credibility and trigger a chain of falsification in the research community.

Why Human Supervision Is Advisable

AI-generated output is frequently generic and heavy on synonyms, and AI tools may be unable to critically analyse the scientific context of a manuscript.

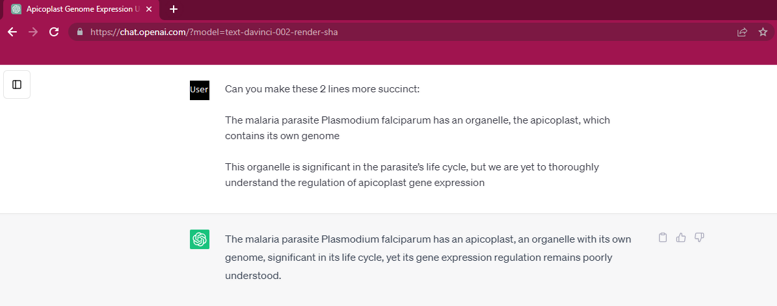

Consider the following example, in which ChatGPT was used to generate a one-line summary of these two sentences:

The malaria parasite Plasmodium falciparum has an organelle, the apicoplast, which contains its own genome.

This organelle is significant in the Plasmodium’s lifecycle, but we are yet to thoroughly understand the regulation of apicoplast gene expression.

The following is a human-generated one-line summary:

The malaria parasite Plasmodium falciparum has an organelle that is significant in its lifecycle called an apicoplast, which contains its own genome—but the regulation of apicoplast gene expression is poorly understood.

On the other hand, the AI-generated summary is as follows:

The malaria parasite Plasmodium falciparum has an apicoplast, an organelle with its own genome, significant in its life cycle, yet its gene expression regulation remains poorly understood.

In the AI-generated text, it is not clear what 'its' refers to in each instance: it could refer either to Plasmodium falciparum or to the apicoplast. Moreover, while the expression 'gene expression regulation' is technically correct, the sentence reads better if you write 'regulation of gene expression'.

This is why humans need to supervise AI tools and verify the accuracy of all information submitted for publication. We ask authors who have used AI or AI-assisted tools to include a declaration statement at the end of their manuscript in which they specify the tool and the reason for using it.

An example of AI-generated text using the software ChatGPT

Data Leakage

AI is now an integral part of scientific research. From data collection to manuscript preparation, AI offers ways to improve and speed up every step of the research process. However, to function, AI needs access to data and enough computing power to process them efficiently. Many AI applications meet these requirements by relying on large, distributed databases and dividing the labour among many individual computers, so they must stay connected to the internet to work. Anything a user enters is therefore sent to, and processed on, the provider's servers, where it may be retained. As a result, researchers who upload content from unpublished papers to platforms like ChatGPT are at a higher risk of data leakage and privacy violations.

To address this issue, governments in various countries have begun to introduce policies. Italy, for example, temporarily banned ChatGPT in spring 2023 over privacy concerns, but later allowed the app to return after its developer introduced new privacy measures, including age verification for users. The European Union is also developing legislation, the AI Act, that will regulate AI platforms such as ChatGPT and Google Bard. The US Congress and India's IT ministry have also hinted at developing new frameworks to ensure that AI complies with safety standards.

Elsevier also strives to minimise the risk of data leakage. Our policy on the use of AI and AI-assisted technologies in scientific writing aims to give authors, readers, reviewers, editors, and contributors more transparency and guidance.

Legal and Ethical Restrictions on Use

Most publishers allow authors to use AI writing tools during manuscript preparation as long as the tools are used to improve sentences, not to generate them wholesale. Elsevier's policy likewise allows authors to use AI tools to improve the readability and language of their submissions, but emphasises that the output must ultimately be reviewed by the author(s) to catch mistakes. We also require authors to disclose their use of AI-assisted writing during the submission process, and this disclosure is included in the published article in the interest of transparency. See Elsevier's policy on the use of AI and AI-assisted technologies in scientific writing for more details.

It is important to note that AI programs are not considered authors of a manuscript: since they do not receive credit, they also do not bear responsibility. Authors are solely responsible for any mistakes in AI-assisted writing that find their way into their manuscripts.

AI-assisted writing is here to stay. While it is advisable to familiarise oneself with AI writing technology, it is equally advisable to be aware of its risks and limitations.

Need safe and reliable writing assistance? Experts at Elsevier Author Services can assist you in every step of the manuscript preparation process. Contact us for a full list of services and any additional information.